Trevor Paglen explores the unseen networks of power that monitor and control us, documenting secret US government bases, offshore prisons and surveillance drones. In the run-up to his show at Milan's Fondazione Prada (until 24 February 2020), Paglen collaborated with the artificial intelligence researcher Kate Crawford to launch ImageNet Roulette, an online interactive project that revealed the often racist or misogynistic ways in which ImageNet, one of the largest online databases widely used to train machines to read pictures, classifies images of people.

At London’s Barbican, Paglen is again examining ImageNet’s classifications, starting from everyday objects like apples and moving towards more abstract concepts to arrive at the category of “anomaly”. We spoke to him about surveillance, AI and how we can begin to imagine a different future.

Tim P. Whitby/Getty Images for Barbican Centre

The Art Newspaper: In 2015, I joined you on a scuba-diving expedition off the coast of Florida to see the fibre-optic cables that carry internet communications between continents. You found them as part of your exploration into how governments spy on their citizens. Is your latest research related to that inquiry?

Trevor Paglen: All of these projects morph from one to the next. Looking for the ocean cables was a result of being involved with Citizenfour [the documentary about the whistleblower Edward Snowden] and trying to understand the infrastructures of surveillance. There’s the National Security Agency and the Central Intelligence Agency but there is also Google, which modulates our life in different ways but is much bigger.

Looking at how large-scale computing and data collection platforms incorporate images leads to a whole series of questions: what are the practices that go into machine learning applications? What are the politics of collecting photographs on an enormous scale? What happens with that shift away from people reading photographs?

There are two ways in which training sets of images for machine learning are made. One kind is made by universities and shared among researchers, so we can look at those sets: for example, ImageNet, which was created by researchers at Stanford and Princeton in 2009. These sets were made with images taken from people's Flickr accounts without their permission. They were then labelled [by crowdsourced workers], sometimes in really misogynistic or racist ways. Ethically, it is very murky. What does it mean to go out and appropriate these images, label them and then use them in machine-learning models that are ubiquitous? What are the politics behind it?

The other training sets are created by companies like Facebook and Google, and are proprietary.

These machine-learning sets are used for facial recognition technologies. Won’t this increased surveillance make us all safer?

We have a desire to find technological solutions to questions that are political and sociological. Technology is seductive. It offers the promise of a quick fix, or the illusion that it is objective and less messy than the hard work required to think through very difficult cultural questions. I want to think very carefully about what problems we are trying to solve with this kind of technology. The other thing to bear in mind is that we're not talking about machine learning in the abstract, in a conceptual vacuum. Google, Amazon, Facebook and Microsoft are companies that are in the business of making money.

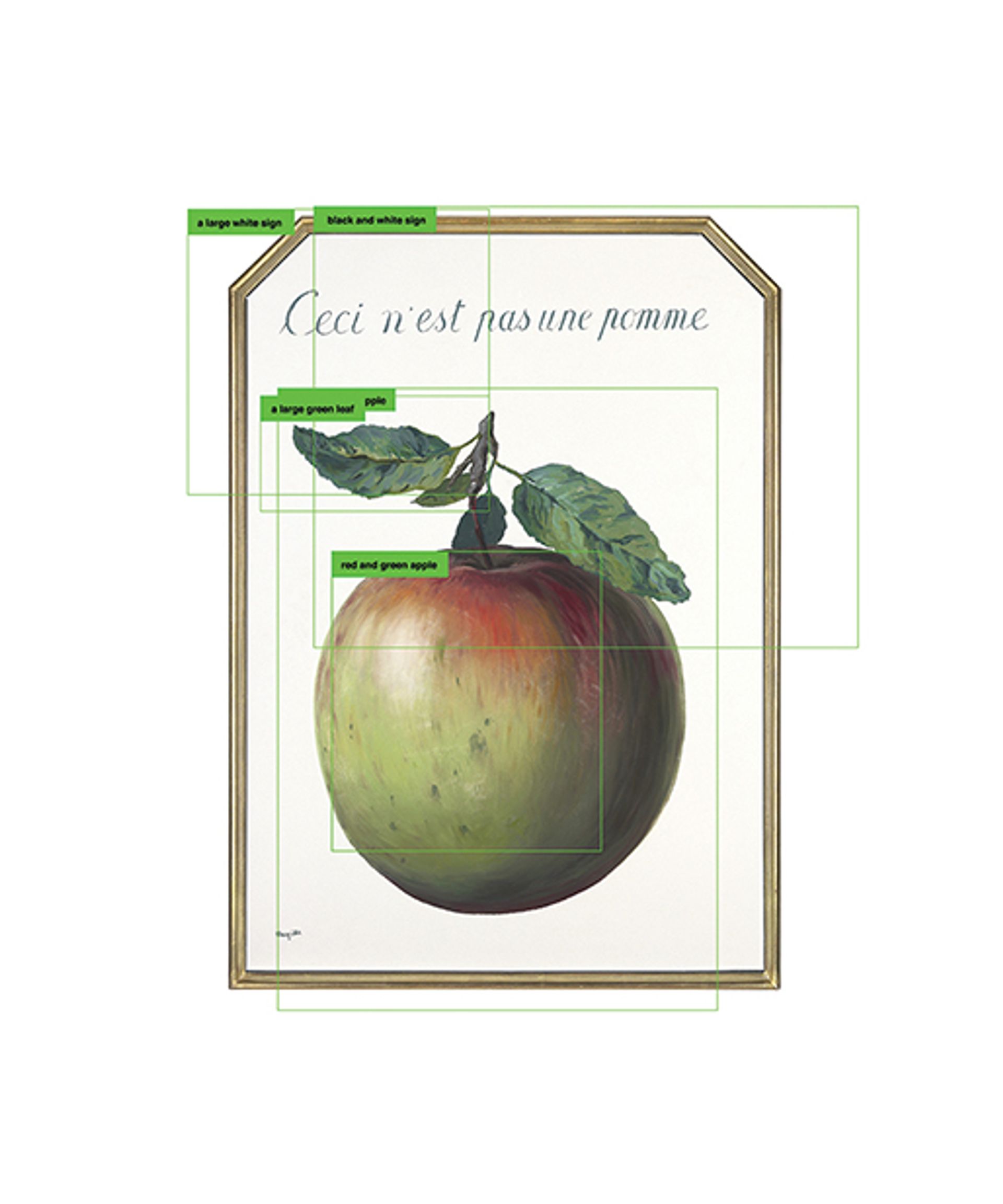

At his Barbican show the US artist embarks on a journey into ImageNet’s classifications, beginning with everyday objects like an apple and progressing towards abstract categorisation © Trevor Paglen, Courtesy of the Artist, Metro Pictures New York, Altman Siegel, San Francisco

And yet we all freely choose to give them our data.

I don’t think we consent to giving all our data to these platforms all the time. I could not do my job without a smartphone. So, I am compelled to use Apple or Google and give them my data. The more these technologies become a part of our lives, the less ability we have to actively consent to participating in them. We cannot change things on an individual level: if one person throws away their smartphone, it’s not going to change the business model of the internet. We should think about larger, regulatory structures. I’m not saying this has to be done on a government level, but it’s certainly not on an individual level.

There are a lot of different levels on which these debates can take place. There are widespread, public conversations that involve a lot of people. That's important. Another important conversation is among technology professionals, the people who are building these systems and trying to critique these problems. Within the arts it is also very important to think about these issues. We are the people who make images. We can think of facial recognition as political portraiture attached to law enforcement.

It's important to bring in people who have relevant expertise but not necessarily a background in computer science, because these conversations are often restricted to computer science departments, where people don't necessarily have the expertise to think about how societies and images work. So it's really vital that we are all engaged.

So, what’s an alternative vision for the future?

It’s important to imagine futures in which things are not inevitable. Right now, it feels like it is inevitable that Facebook and Amazon and Google are going to suck up data; we think it’s inevitable that we are going to be under surveillance and policed. We should not accept this. We don’t really give our information to Facebook. Facebook and other platforms take it. They don’t even know why; they just think it might be useful in the future. There’s nothing inevitable about that. What do we want our mobile phones to do? How do we articulate a response to surveillance capitalism? We need to think about this.

• Trevor Paglen: From “Apple” to “Anomaly”, the Curve at the Barbican Centre, London, until 16 February 2020